We attended TechXLR8, the flagship event of London Tech Week, at London’s ExCeL centre. On day one, representatives from Microsoft and Facebook took to the stage to discuss their approaches to artificial intelligence and how they have been and will be using it in their projects, products, and services.

Michael Wignall, Microsoft

Michael Wignall, Director of Cloud and Enterprise at Microsoft, the department responsible for services such as Microsoft Azure, discussed the recent advances that the tech industry has seen in machine learning and AI in general. He stated that, since 2016, individual implementations of machine learning algorithms have been just about exceeding the capability of humans in tasks such as image recognition, reading comprehension, and audio transcription. But now, in 2019, with the advances in cloud computing we’ve seen over the last three years, including the great increase in computing resources available through services like Microsoft’s own Azure and competitors such as Amazon Web Services, what can we expect of AI in the years to come?

Microsoft has invested over 150 million US dollars in putting AI to practical use in tasks of all sorts, such as increasing accessibility for people, maintaining the Earth’s environment, and facilitating humanitarian aid. Microsoft calls this project the AI for Good Initiative.

On the issue of upholding ethics when using AI, Microsoft has set itself six core principles which it strives to abide by when developing its AI projects.

In particular, they focused on the issues of transparency and accountability; whilst current implementations of AI, such as those created via machine learning, are able to classify on par with humans, they are not transparent as to why they make a given decision. For example, an AI trained to classify images of animals as either being an image of a dog or an image of something other than a dog may very well exhibit 99% accuracy in doing so, but if you tried to ask such an AI why it thinks that picture of your beloved husky is, in fact, a dog, and not a great white shark… good luck getting an answer.

As for accountability, consider a surgeon who deviates from the standard of care required during an operation. If an AI trained to perform live surgery deviated from that standard in the same way, should it be held accountable for the lapse? If so, who takes the brunt: the developers of the AI, the parent company, or some other related party? These are the sorts of questions that Microsoft hopes to answer in a way the AI industry as a whole can begin to agree with. Wignall in particular suggested that businesses developing AI and using it at scale should devise their own set of core ethical principles to abide by, analogous to Microsoft’s own six principles.

Nicola Mendelsohn, Facebook

Nicola Mendelsohn, Vice President of Facebook for Europe, the Middle East, and Africa, took to the stage afterwards to discuss Facebook’s use of AI both now and in the future. The social media giant shared their plans to roll out image recognition features to the platform very soon, allowing users who are blind or visually impaired to hear a description of images shared by their friends.

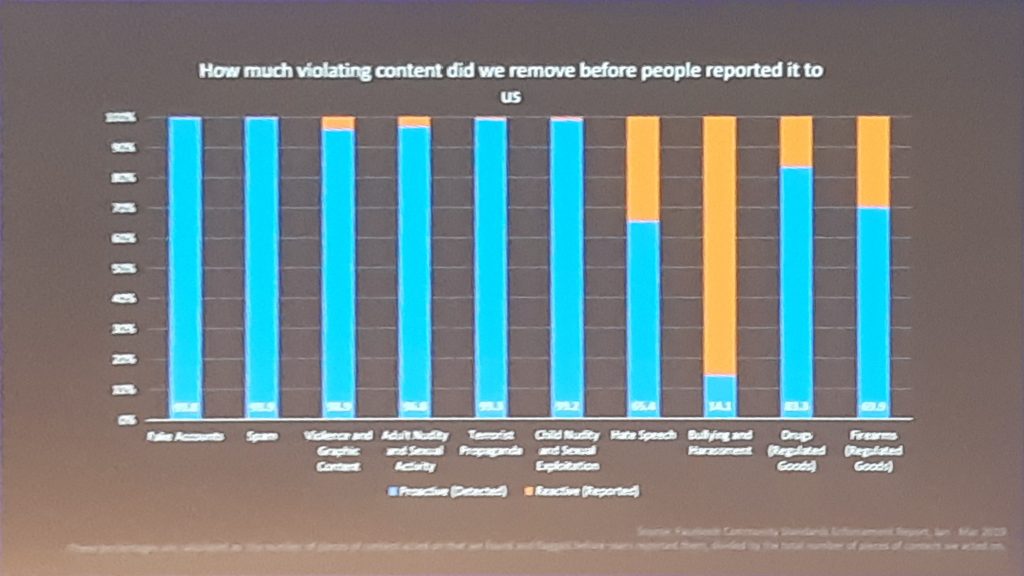

Facebook is also experimenting with a new AI called “Kraken”, designed to classify the content of posts on the platform that include images. Its goal is to determine whether content posted by users is unsavoury or against Facebook’s terms of use. One example is posts advertising the sale of cannabis with an image of the drug under the guise of “Memorial Day deals”, which fool Facebook’s existing text-only post filtering system. “We need to make sure that we are doing everything in our power to stay one step ahead,” said Mendelsohn on the issue, lest users simply work around the AI tools used to classify and subsequently remove infringing or undesirable content.

Mendelsohn said that Kraken has displayed good results in testing so far. To test the efficacy of the system, Facebook has been having it flag content for removal without actually removing it, and then comparing those flags to whether the content was reported by end-users. They have seen over 95% effectiveness in 6 out of 10 content types; only content related to bullying and harassment was detected with less than 65% accuracy, at just 14%.
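
To make that kind of test concrete, here is a minimal sketch in Python of how flags from a system running in "flag only" mode could be compared against user reports, broken down by content type. It is not Facebook's method: the function name `detection_rate_by_type`, the record layout, the content-type labels, and the choice of metric (the share of user-reported posts that the model also flagged) are all assumptions made for illustration, and the sample data is invented.

```python
from collections import defaultdict


def detection_rate_by_type(posts):
    """Return, per content type, the share of user-reported posts the model also flagged.

    `posts` is an iterable of (content_type, flagged_by_model, reported_by_users)
    tuples. This layout and metric are hypothetical, chosen for illustration.
    """
    reported = defaultdict(int)  # posts users reported, per content type
    caught = defaultdict(int)    # of those, posts the model had flagged
    for content_type, flagged, was_reported in posts:
        if was_reported:
            reported[content_type] += 1
            if flagged:
                caught[content_type] += 1
    # Only content types with at least one user report appear in the result.
    return {t: caught[t] / reported[t] for t in reported}


# Invented sample data: nothing is removed, flags are only recorded.
sample = [
    ("drug_sales", True, True),
    ("drug_sales", True, True),
    ("drug_sales", False, False),
    ("bullying", False, True),
    ("bullying", True, True),
    ("bullying", False, True),
    ("bullying", False, True),
]

rates = detection_rate_by_type(sample)
for content_type, rate in sorted(rates.items()):
    print(f"{content_type}: {rate:.0%}")
```

Breaking the figure down per content type, rather than reporting one overall number, is what lets a weak category such as bullying and harassment stand out from the categories where detection is strong.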

The company will be opening a new office on London’s Shaftesbury Avenue, bringing in 500 new jobs. Together with its existing offices in Fitzrovia and Paddington, this will give Facebook over 3,000 employees within London.